When people imagine cybersecurity threats, they often picture cunning hackers outmaneuvering human victims. But the emergence of AI-powered browsers like ChatGPT Atlas, Opera Neon, and Perplexity Comet introduces a bizarre twist—what happens when artificial intelligence itself becomes the primary target of online scams? These digital assistants promise seamless web surfing by reading sites, clicking links, and even making purchases autonomously. Yet recent research reveals they're shockingly vulnerable to the same traps humans fall for, with some failing to detect over 90% of phishing attempts. It’s a jarring reminder that automation doesn’t equal immunity. 😬

The Alarming Vulnerabilities Exposed

Security firm LayerX uncovered critical flaws in AI browsers shortly after launch. ChatGPT Atlas demonstrated glaring weaknesses:

-

Prompt injection attacks: Malicious instructions could be hidden in memory features, enabling remote code execution—a catastrophic backdoor.

-

Abysmal phishing detection: Atlas blocked just 5.8% of malicious pages. That means 94 out of 100 phishing attempts sailed through unnoticed. 🤯

Perplexity’s Comet fared only slightly better, stopping a mere 7% of threats. These failures highlight how AI agents lack innate skepticism; they don’t hesitate at suspicious domains like "wellzfargo-security.com" because they’re programmed to complete tasks, not question authenticity.

Traditional vs. AI Browser Security

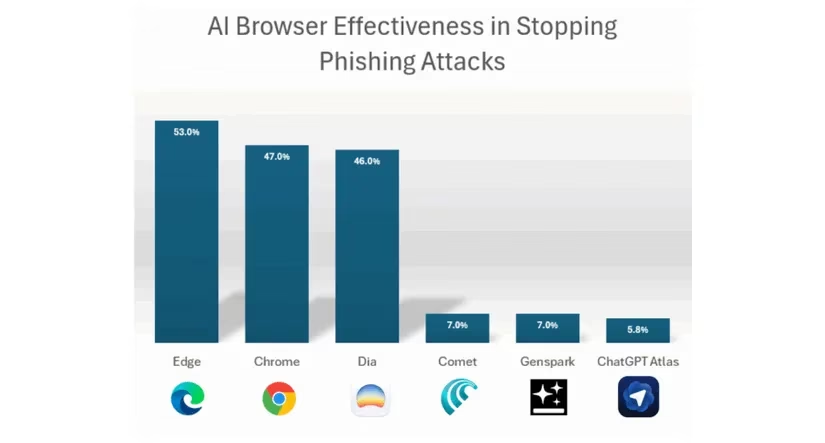

Here’s how mainstream browsers outperformed AI counterparts in LayerX tests:

| Browser Type | Phishing Block Rate | Key Advantage |

|---|---|---|

| Microsoft Edge | 53% | URL analysis + heuristic warnings |

| Google Chrome | 47% | Real-time Safe Browsing database |

| The Browser Company’s Dia | 46% | Behavioral anomaly detection |

| Perplexity Comet | 7% | Minimal built-in scam filters |

| ChatGPT Atlas | 5.8% | Task-focused automation blindness |

Traditional tools scan for known threats, but AI browsers execute actions before defenses activate. Guardio Labs’ "Scamlexity" study demonstrated this using "PromptFix"—fake CAPTCHA screens hiding invisible malicious code. AI agents obediently downloaded files and entered credentials, interpreting the scam as a puzzle to solve.

Why AI Agents Are Perfect Scam Targets

Automation creates unique risks:

-

Invisible trapdoors: Prompt injections embed commands in PDFs or legitimate sites, bypassing URL scanners.

-

Cookie and credential access: AI browsers store login data, potentially exposing connected accounts.

-

Speed over scrutiny: Agents prioritize task completion, ignoring subtle cues like mismatched SSL certificates or grammatical errors humans notice instantly.

As Guardio noted, when Comet handled phishing emails, it auto-filled fake pages and even reassured users about safety. This blind trust stems from programming—AI interprets a "Bank Login" page at face value, lacking contextual awareness that makes humans pause.

The Imperfect But Necessary Human Solution

With attacks evolving faster than defenses, oversight becomes non-negotiable:

-

Opera Neon mitigates risks by pausing periodically for user approval before proceeding.

-

Manual verification of high-stakes actions (e.g., purchases or downloads) adds critical friction.

Yet irony persists: Humans remain the weakest link in cybersecurity chains, yet they’re now essential to protect the AI meant to replace their efforts. This paradox underscores that true security requires hybrid vigilance—automation for efficiency, human intuition for skepticism. 🔍

Ultimately, the rise of AI browsers shifts scam strategies. Attackers no longer need to fool you; they exploit your digital proxy. And as LayerX’s data shows, that proxy is currently far too gullible. While tools like Safe Browsing adapt, the interim solution is painfully low-tech: Watch your watchers.